Topographic Halftone Patterns

I’ve long admired the work of Ed Fairburn. In many of his pieces he draws on old topographic maps, using the contour lines to build up stunning portraits. For Abi’s birthday I thought I’d try to do something similar with a region of the Moon that Abi and I have spent a lot of time analyzing together. I considered commissioning Ed directly as he’s worked with lunar maps in the past, but as far as I’m aware there aren’t any good topographic maps of the area I’m interested in – at least not at the resolution I was aiming for. This is meant to serve as a little tutorial on how I achieved this effect.

Using terrain models

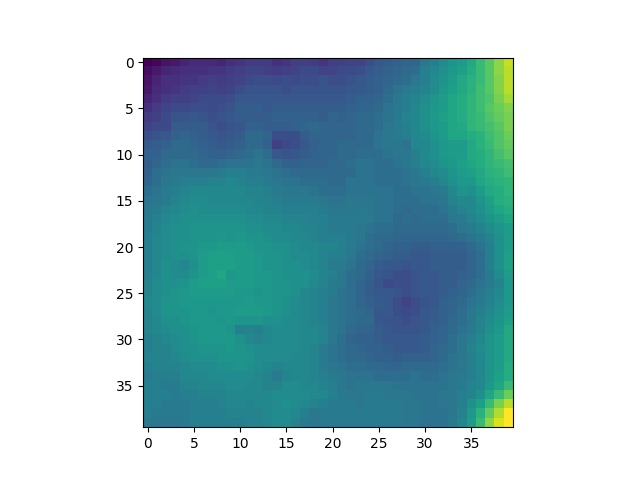

I started from a digital terrain model (DTM) built from measurements captured by the Lunar Orbiter Laser Altimeter (LOLA). imbrium.mit.edu has a great collection of lunar DTMs at a wide range of resolutions. This time we want the gridded data records (GDRs), because rasters are easier to work with.
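GDAL can open the GDR files directly through their labels. If you'd rather stay in NumPy, a minimal reader might look like the sketch below. It assumes a 16-bit LDEM product stored as little-endian signed integers with a scaling factor of 0.5 m per count and an offset of 1737.4 km. Those values, and the helper names `decode_ldem` and `load_ldem`, are my assumptions, so check them against the `.LBL` file that ships with each `.IMG`.

```python
import numpy as np

# Assumptions for a 16-bit LOLA LDEM product; confirm against the
# SAMPLE_TYPE, SAMPLE_BITS, SCALING_FACTOR and OFFSET keys in the .LBL file.
SCALING_FACTOR = 0.5    # metres per raw count
OFFSET = 1737400.0      # reference radius in metres (radius = elevation + OFFSET)


def decode_ldem(raw: bytes, lines: int, samples: int) -> np.ndarray:
    """Convert raw LSB 16-bit counts into elevations (metres) above the reference sphere."""
    counts = np.frombuffer(raw, dtype="<i2").reshape(lines, samples)
    return counts.astype(np.float64) * SCALING_FACTOR


def load_ldem(path: str, lines: int, samples: int) -> np.ndarray:
    """Read an LDEM .IMG from disk; LINES and LINE_SAMPLES come from its label."""
    with open(path, "rb") as f:
        return decode_ldem(f.read(), lines, samples)
```

For the larger high-resolution files, `np.memmap` would avoid reading the whole thing into memory at once.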

From there we can pull out the region we want. If you already know the appropriate coordinates in your map’s projection, gdalwarp is probably the simplest option. Otherwise you can use a small Python function to convert lat/lon coordinates into whatever projection your map uses.
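My original function isn't reproduced here, but a stand-in sketch could look like this. It assumes a spherical Moon of radius 1737.4 km and one of the two projections I'd expect for these GDRs: simple cylindrical (equirectangular) or polar stereographic with true scale at the pole. The `to_pixel` helper is hypothetical. It takes the upper-left corner and pixel size, which you'd read from the label or from `gdalinfo`. Also check `CENTER_LONGITUDE` in the label, since some global products aren't centred on 0°.

```python
import math

# Assumption: a spherical Moon with the 1737.4 km LOLA reference radius.
MOON_RADIUS_M = 1737400.0


def simple_cylindrical(lat_deg: float, lon_deg: float,
                       center_lon_deg: float = 0.0,
                       true_scale_lat_deg: float = 0.0) -> tuple[float, float]:
    """Lat/lon (degrees) -> simple cylindrical (equirectangular) x/y in metres."""
    dlon = math.radians(lon_deg - center_lon_deg)
    x = MOON_RADIUS_M * dlon * math.cos(math.radians(true_scale_lat_deg))
    y = MOON_RADIUS_M * math.radians(lat_deg)
    return x, y


def polar_stereographic(lat_deg: float, lon_deg: float,
                        center_lon_deg: float = 0.0,
                        north: bool = True) -> tuple[float, float]:
    """Lat/lon (degrees) -> polar stereographic x/y in metres, true scale at the pole."""
    phi = math.radians(lat_deg)
    dlon = math.radians(lon_deg - center_lon_deg)
    if north:
        rho = 2 * MOON_RADIUS_M * math.tan(math.pi / 4 - phi / 2)
        return rho * math.sin(dlon), -rho * math.cos(dlon)
    # South polar aspect.
    rho = 2 * MOON_RADIUS_M * math.tan(math.pi / 4 + phi / 2)
    return rho * math.sin(dlon), rho * math.cos(dlon)


def to_pixel(x: float, y: float, ul_x: float, ul_y: float,
             pixel_size_m: float) -> tuple[float, float]:
    """Hypothetical helper: map x/y -> fractional (column, row) in the raster."""
    return (x - ul_x) / pixel_size_m, (ul_y - y) / pixel_size_m
```

The resulting x/y values can also be passed straight to gdalwarp's `-te` option instead of slicing the array yourself.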

After this I used scipy.ndimage.zoom and scipy.ndimage.gaussian_filter to increase the working resolution and reduce pixelation artifacts in the contour lines.
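Roughly, that step amounts to the sketch below. The zoom factor and blur radius are placeholder values, not the ones I settled on. Note that `sigma` is measured in pixels of the upsampled grid.

```python
import numpy as np
from scipy import ndimage


def upsample_and_smooth(dem: np.ndarray, factor: float = 4.0,
                        sigma: float = 2.0) -> np.ndarray:
    """Upsample a DEM with cubic spline interpolation, then blur it slightly."""
    # order=3 uses cubic splines, which avoids the blocky look of nearest-neighbour.
    fine = ndimage.zoom(dem, factor, order=3)
    # Blurring after the zoom softens the staircase artifacts that show up in contours.
    return ndimage.gaussian_filter(fine, sigma=sigma)
```

Blurring after zooming, rather than before, lets the filter smooth out interpolation artifacts at the finer scale where the contours are actually traced.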

Generating halftone pattern

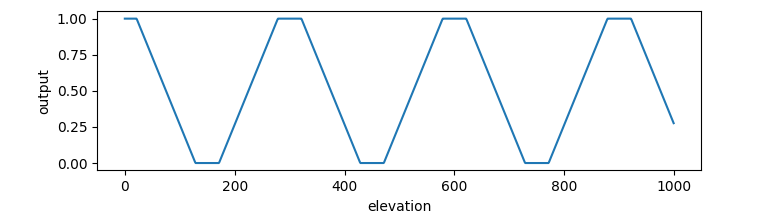

For the halftone effect, we want regions close to contour lines to be closer to black and areas far from the contour lines to be lighter. To achieve this we’ll use a “ping-pong” function that bounces between 0 (at contour lines) and 1 (exactly halfway between two contour lines).
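One way to write such a function is as a triangle wave over elevation. In this sketch, `interval` is a hypothetical name for the contour spacing, in the same units as the DEM.

```python
import numpy as np


def ping_pong(elevation: np.ndarray, interval: float) -> np.ndarray:
    """Triangle wave: 0 on every multiple of `interval`, 1 halfway between."""
    # Position within the current contour band, in [0, 1); np.mod keeps
    # this non-negative even for elevations below the reference sphere.
    phase = np.mod(elevation / interval, 1.0)
    # Fold the sawtooth into a triangle: 0 -> 0, 0.5 -> 1, approaching 1 -> 0.
    return 1.0 - np.abs(2.0 * phase - 1.0)
```

For example, with `interval=100.0`, elevations of 0, 25, 50 and −50 m map to 0, 0.5, 1 and 1 respectively.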

To make sure the contours never completely disappeared or got completely filled in, I overshot the [0, 1] range a bit and then clamped the result, so the pattern as a function of elevation ends up looking a bit like this:

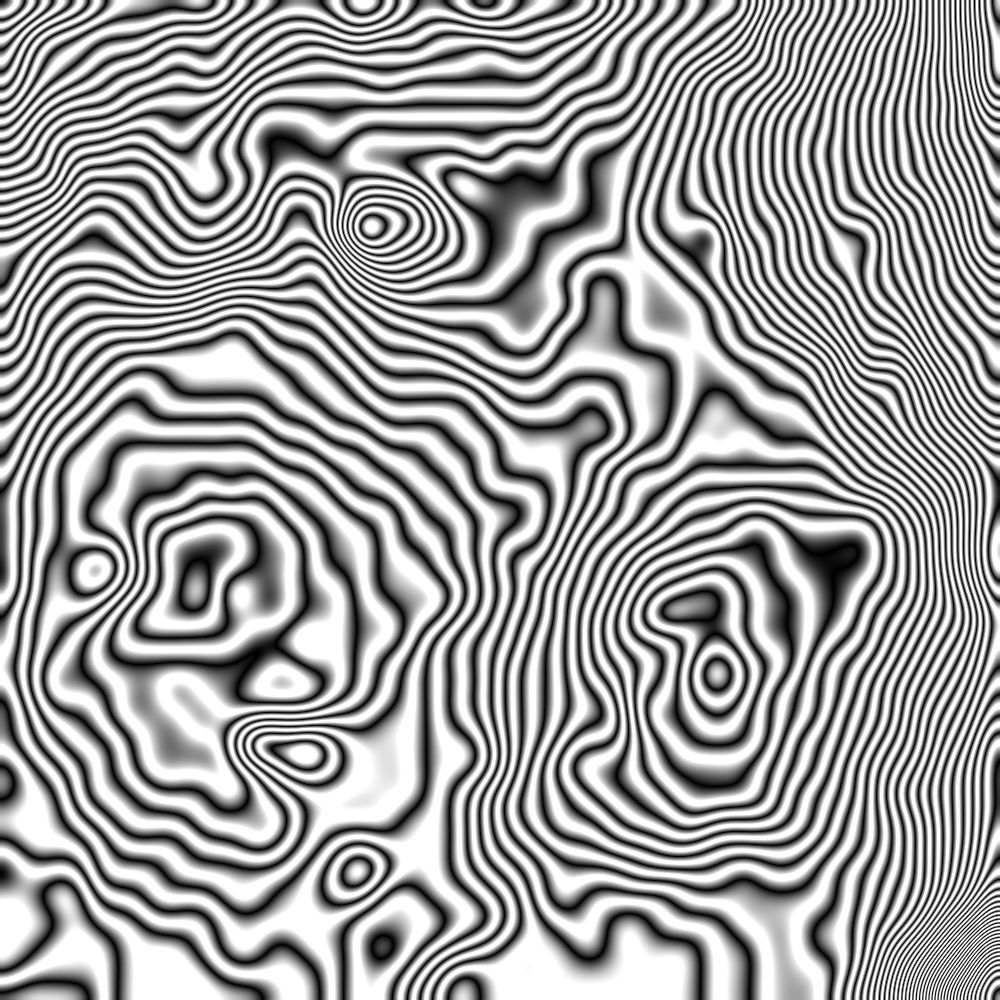
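In code, the overshoot-and-clamp step might be sketched as follows. The `overshoot` amount is a placeholder you'd tune by eye. Stretching the triangle wave past [0, 1] and clipping it creates flat plateaus: a band of pure black around each contour line and a band of pure white midway between them.

```python
import numpy as np


def ping_pong(elevation: np.ndarray, interval: float) -> np.ndarray:
    """Triangle wave: 0 on every multiple of `interval`, 1 halfway between."""
    phase = np.mod(elevation / interval, 1.0)
    return 1.0 - np.abs(2.0 * phase - 1.0)


def halftone_pattern(elevation: np.ndarray, interval: float,
                     overshoot: float = 0.15) -> np.ndarray:
    """Stretch the ping-pong wave past [0, 1], then clamp it back into range."""
    wave = ping_pong(elevation, interval)
    # Linear stretch: 0 -> -overshoot and 1 -> 1 + overshoot.
    stretched = wave * (1.0 + 2.0 * overshoot) - overshoot
    # Clipping turns the overshoot into flat black and white plateaus.
    return np.clip(stretched, 0.0, 1.0)
```

The plateaus are what keep a thin black line alive even over the brightest parts of the image, and a thin white gap alive over the darkest parts, once the pattern is blended and thresholded.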

And you should end up with something more or less like this trippy pattern:

Applying to an image

After generating the halftone pattern, we can apply it to our desired image. One option is to multiply the pixel luminosities, but I had the most success with their arithmetic mean. Apply a threshold to the result and you should be done!

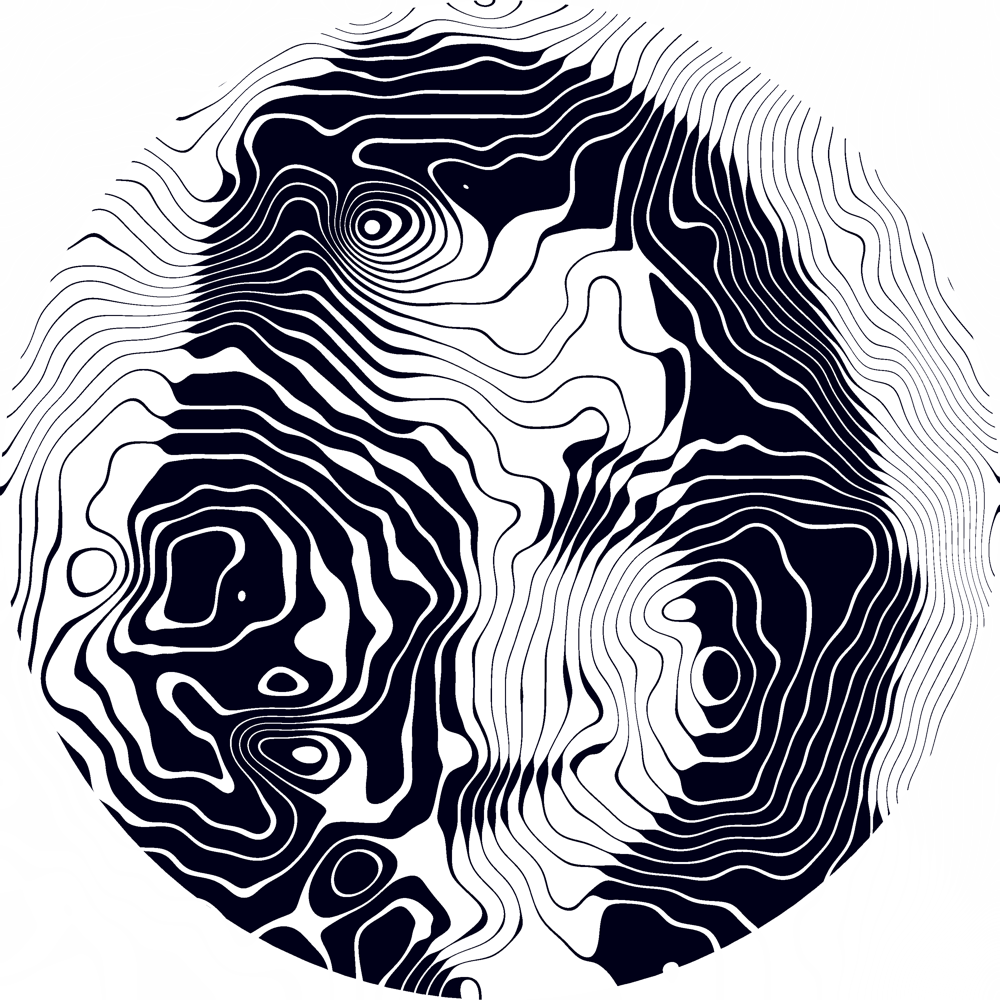
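Here's a minimal sketch of both blending options followed by a threshold. It assumes the image has already been converted to grayscale luminance in [0, 1] and resampled to the same shape as the pattern. The `threshold=0.5` default is a placeholder.

```python
import numpy as np


def apply_halftone(image: np.ndarray, pattern: np.ndarray,
                   threshold: float = 0.5, mode: str = "mean") -> np.ndarray:
    """Blend a grayscale image with the halftone pattern and threshold it.

    Both inputs are luminance arrays of the same shape with values in [0, 1].
    Returns a uint8 image: 255 where the blend is >= threshold, 0 elsewhere.
    """
    if image.shape != pattern.shape:
        raise ValueError("image and pattern must have the same shape")
    if mode == "mean":
        blended = (image + pattern) / 2.0      # arithmetic mean
    elif mode == "multiply":
        blended = image * pattern              # multiplied luminosities
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    return np.where(blended >= threshold, 255, 0).astype(np.uint8)
```

With the mean, a pixel sitting on a black plateau of the pattern (value 0) can never reach a blend of 0.5 unless the image is fully white there. Multiplication, by contrast, wipes out anything the pattern touches with 0, which tends to make dark regions swallow the contours.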

To quickly hack in some basic anti-aliasing, I simply generated the image 3 times larger than needed and downsampled it afterwards. This caused some memory issues on my laptop, but it was so easy to implement that I didn’t bother doing anything fancier. I also applied a circular mask to the result to hide the region in the bottom right where the contours are a bit too close together for my liking.
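Both tricks are only a few lines of NumPy. The sketch below uses plain block averaging (a box filter) for the downsampling, which may differ from the resize I actually used. It also assumes a white background outside the circle. The helper names are hypothetical.

```python
import numpy as np


def downsample(img: np.ndarray, factor: int = 3) -> np.ndarray:
    """Average each factor x factor block (a box filter), cropping leftovers."""
    h, w = img.shape
    h, w = h - h % factor, w - w % factor
    blocks = img[:h, :w].astype(np.float64).reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))


def circular_mask(img: np.ndarray, background: float = 1.0) -> np.ndarray:
    """Keep the largest centred circle and fill everything outside it."""
    h, w = img.shape
    yy, xx = np.mgrid[:h, :w]
    cy, cx = (h - 1) / 2, (w - 1) / 2
    radius = min(h, w) / 2
    inside = (yy - cy) ** 2 + (xx - cx) ** 2 <= radius ** 2
    return np.where(inside, img, background)
```

Usage would be something like `circular_mask(downsample(binary / 255.0, 3))`. Averaging a thresholded image produces intermediate grays along the line edges, which is where the anti-aliasing comes from.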

Wrapping up

A coworker recommended I use Whitewall to print, and I have to say I was quite happy with their service. They said it would arrive in 9 business days, but it showed up in less than 2, and the quality of the print was more than suitable.

I’m very happy with how this turned out, though it took an enormous amount of fiddling to produce decent results, and I feel it still lacks a lot of the compositional finesse that Ed Fairburn is able to pull off. If you’re interested in a piece in this style but want a better result without tweaking variables for hours, I highly recommend looking into commissioning some of Ed’s incredible work.